GlusterFS is an open-source, simple, elastic, and scalable file storage system suited to data-intensive workloads such as media streaming, cloud storage, and content delivery networks (CDNs).

We often get requests from clients to install the GlusterFS server on CentOS 7, as it is part of our server management plan. Our technical support team is also available 24/7 to assist with installation and configuration.

Set up GlusterFS storage on RHEL 7.x and CentOS 7.x

This guide assumes four RHEL 7/CentOS 7 servers with a minimal installation, each with an additional disk attached for GlusterFS.

Add the following lines to the /etc/hosts file on each server if you do not have a DNS server that resolves these names.

192.xxx.xx.10 server1.com server1

192.xxx.xx.20 server2.com server2

192.xxx.xx.30 server3.com server3

192.xxx.xx.40 server4.com server4
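Before continuing, it can help to confirm that every node name is actually present in the hosts file. The following is a minimal sketch, assuming a hypothetical helper named missing_hosts (it is not part of GlusterFS); it only reads the file and prints the names it cannot find.

```shell
# Hypothetical helper: print each expected hostname that is missing
# from a hosts file. Usage: missing_hosts FILE NAME...
missing_hosts() {
    file=$1
    shift
    for name in "$@"; do
        # Skip comment lines and compare the name against every field
        # after the IP address.
        if ! awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
            echo "$name"
        fi
    done
}

# Check the four short names used in this guide.
missing_hosts /etc/hosts server1 server2 server3 server4
```

If the command prints nothing, all four names were found.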

Run the following commands on each server to set up the Gluster and EPEL repositories and install the GlusterFS server package.

yum install wget

yum install centos-release-gluster -y

yum install epel-release -y

yum install glusterfs-server -y

Once the installation is complete, start and enable the GlusterFS service with the following commands.

systemctl start glusterd

systemctl enable glusterd

Next, open the required ports in the firewall so that the servers can communicate and form the storage cluster. Run the following commands.

firewall-cmd --zone=public --add-port=24007-24008/tcp --permanent

firewall-cmd --zone=public --add-port=24009/tcp --permanent

firewall-cmd --zone=public --add-service=nfs --add-service=samba --add-service=samba-client --permanent

firewall-cmd --zone=public --add-port=111/tcp --add-port=139/tcp --add-port=445/tcp --add-port=965/tcp --add-port=2049/tcp --add-port=38465-38469/tcp --add-port=631/tcp --add-port=111/udp --add-port=963/udp --add-port=49152-49251/tcp --permanent

firewall-cmd --reload
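After the reload, the open ports can be compared against the GlusterFS ports added above. This is a sketch only: missing_ports is a hypothetical helper, and the list in required simply repeats the management and brick port ranges from the commands above.

```shell
# GlusterFS management and brick ports taken from the rules above.
required="24007-24008/tcp 24009/tcp 49152-49251/tcp"

# Hypothetical helper: print each required port spec that does not
# appear in the space-separated list passed as the first argument.
missing_ports() {
    open=" $1 "
    for spec in $required; do
        case "$open" in
            *" $spec "*) ;;
            *) echo "$spec" ;;
        esac
    done
}

# On a server where firewalld is installed, compare against the live rules.
if command -v firewall-cmd >/dev/null 2>&1; then
    missing_ports "$(firewall-cmd --zone=public --list-ports)"
fi
```

No output means every listed port range is open in the public zone.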

Distributed volume setup:

Let's form a trusted storage pool consisting of server 1 and server 2, create a brick on each, and then create the distributed volume. Assume a raw disk (/dev/sdb) of 16 GB is allocated to each server.

Run the following command from the server 1 console to form a trusted storage pool with server 2.

gluster peer probe server2.com

Check the peer status on server 1.

gluster peer status

Creating brick on server 1.

To set up the brick we need to create the logical volumes on the raw disk.

Run the following commands on server 1.

pvcreate /dev/sdb

vgcreate vg_bricks /dev/sdb

lvcreate -L 14G -T vg_bricks/brickpool1

In the above command, brickpool1 is the name of the thin pool.

Then create a thin logical volume of 3 GB.

lvcreate -V 3G -T vg_bricks/brickpool1 -n dist_brick1

Format the logical volume with the XFS file system.

mkfs.xfs -i size=512 /dev/vg_bricks/dist_brick1

Create the mount point, then mount the brick using the mount command.

mkdir -p /bricks/dist_brick1

mount /dev/vg_bricks/dist_brick1 /bricks/dist_brick1/

To mount it permanently, add the following line to /etc/fstab.

/dev/vg_bricks/dist_brick1 /bricks/dist_brick1 xfs rw,noatime,inode64,nouuid 1 2

Then create a directory named brick under the mount point.

mkdir /bricks/dist_brick1/brick

Perform a similar set of commands on server 2.

[root@server2 ~]# pvcreate /dev/sdb ; vgcreate vg_bricks /dev/sdb

[root@server2 ~]# lvcreate -L 14G -T vg_bricks/brickpool2

[root@server2 ~]# lvcreate -V 3G -T vg_bricks/brickpool2 -n dist_brick2

[root@server2 ~]# mkfs.xfs -i size=512 /dev/vg_bricks/dist_brick2

[root@server2 ~]# mkdir -p /bricks/dist_brick2

[root@server2 ~]# mount /dev/vg_bricks/dist_brick2 /bricks/dist_brick2/

[root@server2 ~]# mkdir /bricks/dist_brick2/brick
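The brick-creation steps are identical on every server apart from the thin pool and brick names. As a minimal sketch, a hypothetical helper named brick_commands (not part of LVM or GlusterFS) could print the sequence for review; it assumes the volume group is called vg_bricks, as in this guide, and that the thin pool already exists.

```shell
# Hypothetical helper: print the commands that create one
# thin-provisioned brick. Nothing is executed here.
# Arguments: thin pool name, brick name, virtual size (for example 3G).
brick_commands() {
    pool=$1
    brick=$2
    size=$3
    echo "lvcreate -V $size -T vg_bricks/$pool -n $brick"
    echo "mkfs.xfs -i size=512 /dev/vg_bricks/$brick"
    echo "mkdir -p /bricks/$brick"
    echo "mount /dev/vg_bricks/$brick /bricks/$brick/"
    echo "mkdir /bricks/$brick/brick"
}

# Print the sequence used on server 2 above.
brick_commands brickpool2 dist_brick2 3G
```

Printing rather than executing keeps the sketch safe to try; once the output looks right, the commands can be run as root on the target server.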

Use the following gluster commands to create the distributed volume.

[root@server1 ~]# gluster volume create distvol server1.com:/bricks/dist_brick1/brick server2.com:/bricks/dist_brick2/brick

[root@server1 ~]# gluster volume start distvol

volume start: distvol: success

[root@server1 ~]#

Verify the volume status with the following command.

[root@server1 ~]# gluster volume info distvol

Mount the distributed volume on the client:

Before mounting the volume with the GlusterFS native client, ensure that the glusterfs-fuse package is installed on the client.

Log in to the client console and run the following command to install glusterfs-fuse.

yum install glusterfs-fuse -y

Next, create a mount point for the distributed volume.

mkdir /mnt/distvol

Mount ‘distvol’ using the following command.

mount -t glusterfs -o acl server1.com:/distvol /mnt/distvol/

For a permanent mount, add the following entry to the /etc/fstab file.

server1.com:/distvol /mnt/distvol glusterfs _netdev 0 0

Then run the df command to verify the mount status and size of the volume.

df -Th

Replicated volume setup:

For replication, use server 3 and server 4, and assume that the additional disk (/dev/sdb) for GlusterFS is already attached to each server.

Run the following commands to add server 3 and server 4 to the trusted storage pool.

gluster peer probe server3.com

gluster peer probe server4.com

Run the following commands to create and mount the brick on server 3.

pvcreate /dev/sdb ; vgcreate vg_bricks /dev/sdb

lvcreate -L 14G -T vg_bricks/brickpool3

lvcreate -V 3G -T vg_bricks/brickpool3 -n shadow_brick1

mkfs.xfs -i size=512 /dev/vg_bricks/shadow_brick1

mkdir -p /bricks/shadow_brick1

mount /dev/vg_bricks/shadow_brick1 /bricks/shadow_brick1/

mkdir /bricks/shadow_brick1/brick

To mount it permanently, add the following line to /etc/fstab.

/dev/vg_bricks/shadow_brick1 /bricks/shadow_brick1/ xfs rw,noatime,inode64,nouuid 1 2

Run a similar set of commands from the server 4 console to create and mount its brick.

pvcreate /dev/sdb ; vgcreate vg_bricks /dev/sdb

lvcreate -L 14G -T vg_bricks/brickpool4

lvcreate -V 3G -T vg_bricks/brickpool4 -n shadow_brick2

mkfs.xfs -i size=512 /dev/vg_bricks/shadow_brick2

mkdir -p /bricks/shadow_brick2

mount /dev/vg_bricks/shadow_brick2 /bricks/shadow_brick2/

mkdir /bricks/shadow_brick2/brick

Use the following gluster commands to create the replicated volume.

gluster volume create shadowvol replica 2 server3.com:/bricks/shadow_brick1/brick server4.com:/bricks/shadow_brick2/brick

volume create: shadowvol: success: please start the volume to access data.

gluster volume start shadowvolRun the commands to verify the volume info.

gluster volume info shadowvolAdd the below entry in the file “/etc/nfsmount.conf” on both storage server3 and the serevr4.

Defaultvers=3

Reboot both servers. Run following mount commands to volume “shadowvol”.

mount -t nfs -o vers=3 server4.com:/shadowvol /mnt/shadowvol/For permanent mount add the below entry in /etc/fstab file.

server4.com:/shadowvol /mnt/shadowvol/ nfs vers=3 0 0Later, verify the size and the mount status of the volume.

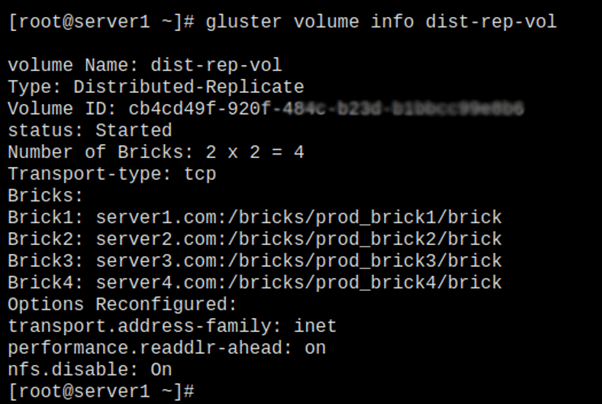

df -ThDistribute- replicate volume setup:

To set up the distributed-replicated volume, use one brick from each server to form the volume. Let’s create a logical volume from the existing thin pool on each server.

Run the following commands to create a brick on each of the four servers.

Server 1

lvcreate -V 3G -T vg_bricks/brickpool1 -n prod_brick1

mkfs.xfs -i size=512 /dev/vg_bricks/prod_brick1

mkdir -p /bricks/prod_brick1

mount /dev/vg_bricks/prod_brick1 /bricks/prod_brick1/

mkdir /bricks/prod_brick1/brick

Server 2

lvcreate -V 3G -T vg_bricks/brickpool2 -n prod_brick2

mkfs.xfs -i size=512 /dev/vg_bricks/prod_brick2

mkdir -p /bricks/prod_brick2

mount /dev/vg_bricks/prod_brick2 /bricks/prod_brick2/

mkdir /bricks/prod_brick2/brick

Server 3

lvcreate -V 3G -T vg_bricks/brickpool3 -n prod_brick3

mkfs.xfs -i size=512 /dev/vg_bricks/prod_brick3

mkdir -p /bricks/prod_brick3

mount /dev/vg_bricks/prod_brick3 /bricks/prod_brick3/

mkdir /bricks/prod_brick3/brick

Server 4

lvcreate -V 3G -T vg_bricks/brickpool4 -n prod_brick4

mkfs.xfs -i size=512 /dev/vg_bricks/prod_brick4

mkdir -p /bricks/prod_brick4

mount /dev/vg_bricks/prod_brick4 /bricks/prod_brick4/

mkdir /bricks/prod_brick4/brick

Use the following gluster commands to create and start the volume named ‘dist-rep-vol’.

gluster volume create dist-rep-vol replica 2 server1.com:/bricks/prod_brick1/brick server2.com:/bricks/prod_brick2/brick server3.com:/bricks/prod_brick3/brick server4.com:/bricks/prod_brick4/brick force

gluster volume start dist-rep-vol

Verify the volume with the following command.

gluster volume info dist-rep-vol

In this volume, the four bricks form two replica pairs. Each file is first distributed to one of the two pairs and then replicated to both bricks of that pair.
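With a replica count of 2, GlusterFS groups the bricks into replica sets in the order they are listed in the create command, so adjacent bricks mirror each other. The sketch below only illustrates that grouping; replica_sets is a hypothetical helper and does not talk to GlusterFS.

```shell
# Hypothetical helper: group an ordered brick list into replica sets.
# Usage: replica_sets REPLICA_COUNT BRICK...
replica_sets() {
    count=$1
    shift
    set_no=1
    line=""
    i=0
    for b in "$@"; do
        line="$line $b"
        i=$((i + 1))
        # Every "count" bricks close one replica set.
        if [ $((i % count)) -eq 0 ]; then
            echo "set $set_no:$line"
            set_no=$((set_no + 1))
            line=""
        fi
    done
}

# Brick order used for dist-rep-vol in this guide.
replica_sets 2 server1 server2 server3 server4
```

For the order used here, server 1 and server 2 form one replica set and server 3 and server 4 form the other, so listing the bricks in a different order would change which servers hold copies of the same file.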

Now, mount the volume on the client machine via gluster.

Create the mount point and mount the volume.

mkdir /mnt/dist-rep-vol

mount.glusterfs server1.com:/dist-rep-vol /mnt/dist-rep-vol/

Then add the following entry to /etc/fstab to make the mount permanent.

server1.com:/dist-rep-vol /mnt/dist-rep-vol/ glusterfs _netdev 0 0

Run the following df command to verify the size and mount status of the volume.

df -Th

Conclusion:

This blog demonstrated how to install and configure GlusterFS storage on RHEL 7.x and CentOS 7.x. You can also contact our technical support team for further help with installation and configuration.